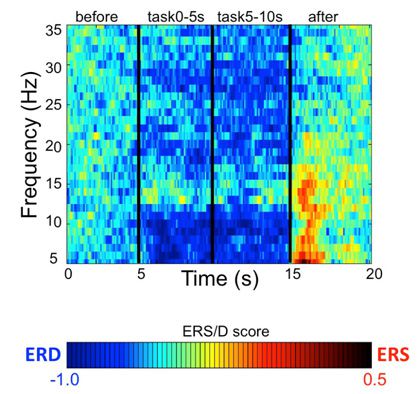

Power spectrum during silent reading at C3 (left-central region).

Speech production is one of the most important components of human communication. However, the cortical mechanisms governing speech are not well understood, because it is extremely difficult to measure brain activity during speech production itself.

Now, Takeshi Tamura and Michiteru Kitazaki at Toyohashi University of Technology, Atsuko Gunji and her colleagues at the National Institute of Mental Health, Hiroshige Takeichi at RIKEN, and Hiroaki Shigemasu at Kochi University of Technology have found that mu-rhythms in the cortex are modulated in relation to speech production.

The researchers recorded electroencephalograms (EEG) with pre-amplified electrodes under four conditions: simulated vocalization, simulated vocalization with delayed auditory feedback, simulated vocalization under loud noise, and silent reading. The authors define ‘mu-rhythm’ as a decrease in 8-16 Hz EEG power during the task period.
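To make the definition above concrete, the following is a minimal sketch of how a decrease in 8-16 Hz power could be quantified. It is not the authors' analysis pipeline. The comparison against a baseline (rest) segment, the 128 Hz sampling rate, the function names, and the synthetic sine-wave signals are all assumptions chosen for illustration.

```python
import math


def band_power(samples, fs, f_lo=8.0, f_hi=16.0):
    """Mean spectral power of `samples` in [f_lo, f_hi] Hz, via a direct DFT."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [x - mean for x in samples]  # remove the DC offset
    total, count = 0.0, 0
    for k in range(1, n // 2 + 1):
        freq = k * fs / n  # frequency of DFT bin k in Hz
        if f_lo <= freq <= f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n)
                     for i, x in enumerate(centered))
            im = -sum(x * math.sin(2 * math.pi * k * i / n)
                      for i, x in enumerate(centered))
            total += (re * re + im * im) / (n * n)
            count += 1
    return total / count if count else 0.0


def relative_power_decrease(baseline, task, fs):
    """Fraction by which band power drops from baseline to task (assumed metric)."""
    p_base = band_power(baseline, fs)
    if p_base == 0.0:
        raise ValueError("baseline has no power in the band")
    return (p_base - band_power(task, fs)) / p_base


def sine(freq, amp, fs, seconds):
    """Synthetic test signal; stands in for a real EEG channel such as C3."""
    return [amp * math.sin(2 * math.pi * freq * i / fs)
            for i in range(int(fs * seconds))]


fs = 128                              # hypothetical sampling rate in Hz
baseline = sine(10.0, 2.0, fs, 1.0)   # 10 Hz rhythm at rest
task = sine(10.0, 1.0, fs, 1.0)       # same rhythm, half the amplitude
decrease = relative_power_decrease(baseline, task, fs)
print(round(decrease, 3))             # 0.75: halving amplitude quarters power
```

With real recordings, a windowed estimate averaged over many trials would normally replace the single direct DFT used here.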

The mu-rhythm at the sensory-motor cortical area was observed under all simulated vocalization conditions, and it was also boosted by delayed feedback and attenuated by loud noise. Because these auditory interferences influence speech production, the result supports the premise that audio-vocal monitoring systems play an important role in speech production. The motor-related mu-rhythm is a critical index for clarifying the neural mechanisms of speech production as an audio-vocal perception-action system.

In the future, a neurofeedback method based on monitoring the mu-rhythm at the sensory-motor cortex may facilitate the rehabilitation of speech-related deficits.