Prof. Sunghoon Im of DGIST (right) and Seunghoon Lee, a student in the integrated master's and Ph.D. program (left)

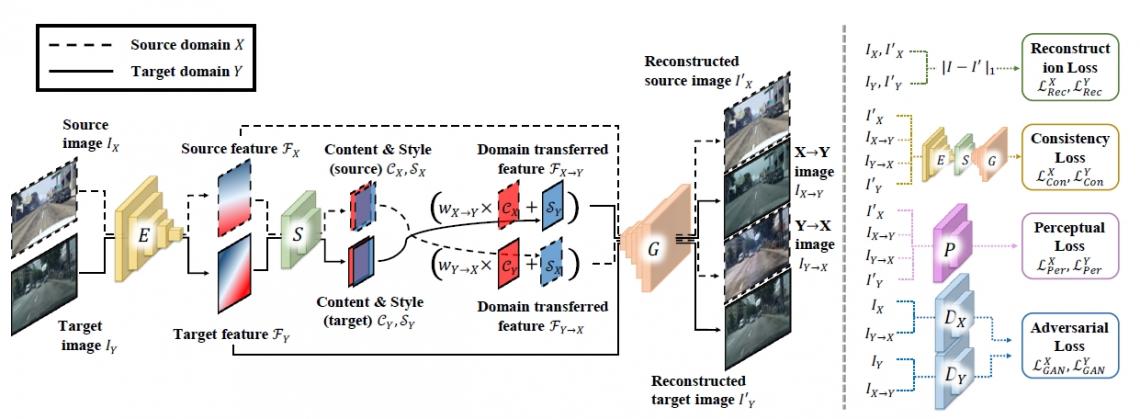

Neural network structure designed by Professor Sunghoon Im’s research team at DGIST

Prof. Sunghoon Im of the Department of Information & Communication Engineering at DGIST has developed an artificial intelligence (AI) neural network module that uses deep learning to separate and convert the environmental information contained in complex images. The network is expected to contribute significantly to future advances in AI fields such as image conversion and domain adaptation.

Deep learning, the foundation of modern AI technology, has advanced rapidly in recent years, and research on using it to create and convert images has become especially active. Conventional studies have focused on finding image information that is common to a domain, that is, a set of images sharing many similar features. As a result, much of the image information went unused, which limited the performance of the data and models that could be applied. Another limitation is that, because the image information was assumed to have a simple linear structure, only one converted image could be obtained.

Professor Im’s research team hypothesized that the structure of image information may differ from domain to domain and need not be as simple as a linear structure. The team designed a separator that cleanly divides image information into overall form information and style information. Building on this, they applied a different weight to each domain to reflect the differences between domains. They then developed a neural network structure that uses the correlation between the separated pieces of image information to determine the appropriate style information for each image composition.
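The article does not describe the network's internals. As a rough illustration of what separating form from style and using a different weight for each domain can mean, here is a minimal NumPy sketch under stated assumptions: it uses the widely known AdaIN-style split (per-channel mean and standard deviation as style, normalized features as form), and the per-domain scale-and-shift table `domain_weights` and the function names are hypothetical. It is not the DGIST team's method, and it leaves out the correlation-based style selection.

```python
import numpy as np

# Minimal sketch, NOT the DGIST team's method: an AdaIN-style split of a
# feature map into "form" (normalized features) and "style" (per-channel
# statistics), re-styled with a hypothetical per-domain weight table.

def split_form_style(feat, eps=1e-5):
    """Split a (C, H, W) feature map into form and style components."""
    mean = feat.mean(axis=(1, 2), keepdims=True)       # per-channel mean
    std = feat.std(axis=(1, 2), keepdims=True) + eps   # per-channel std (eps avoids division by zero)
    form = (feat - mean) / std                         # style-free "form" information
    return form, mean, std

def restyle(form, mean, std, domain_weights, domain):
    """Recombine form with style statistics adjusted by a per-domain weight."""
    scale, shift = domain_weights[domain]  # hypothetical per-domain parameters
    return form * (std * scale) + (mean + shift)

# Usage: a random 3-channel, 4x4 feature map and two hypothetical domains.
rng = np.random.default_rng(0)
feat = rng.normal(size=(3, 4, 4))
domain_weights = {
    "source": (np.ones((3, 1, 1)), np.zeros((3, 1, 1))),           # leaves style unchanged
    "target": (np.full((3, 1, 1), 2.0), np.full((3, 1, 1), 0.5)),  # hypothetical target style
}
form, mean, std = split_form_style(feat)
restored = restyle(form, mean, std, domain_weights, "source")
converted = restyle(form, mean, std, domain_weights, "target")
print(np.allclose(restored, feat))  # True
```

Because only the style statistics change while the form component is reused, one set of form features can yield a different output for each domain, which loosely mirrors the single-model, many-domain advantage described in the article.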

The new neural network has the advantage that a single model can easily perform image conversions across many domains. When the team applied the domain adaptation algorithm to a visual recognition problem, accuracy more than doubled.

Prof. Im said, “In this study, we developed a neural network that incorporates a new analysis of image information. We expect that if the technology is improved a little further, it can be applied in several fields and contribute positively to the development of AI.”

Seunghoon Lee, a student in the integrated master's and Ph.D. program in Information and Communication Engineering, participated in this research as the first author. The paper was published at the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), a leading international conference in the AI field, and was released online on Friday, June 25.