□ Daegu Gyeongbuk Institute of Science and Technology (DGIST; President Young Kuk) announced on Thursday, October 12, that a research team led by Professor Sung-hoon Im of the Department of Electrical Engineering and Computer Science has developed a multi-task deep learning technique based on neural network architecture discovery technology.[1] Because the technique lets a single network perform multiple tasks simultaneously without degrading the performance of any of them, it is expected to find applications in fields that must handle several tasks efficiently, such as small devices and autonomous driving.

□ A persistent problem in recent multi-task learning research is that overall performance drops when weakly related tasks are learned together in a single, integrated neural network. Previous studies tried to overcome this by changing the network architecture with dynamic neural network technology, but this approach makes it difficult to explore a wide variety of neural network architectures.

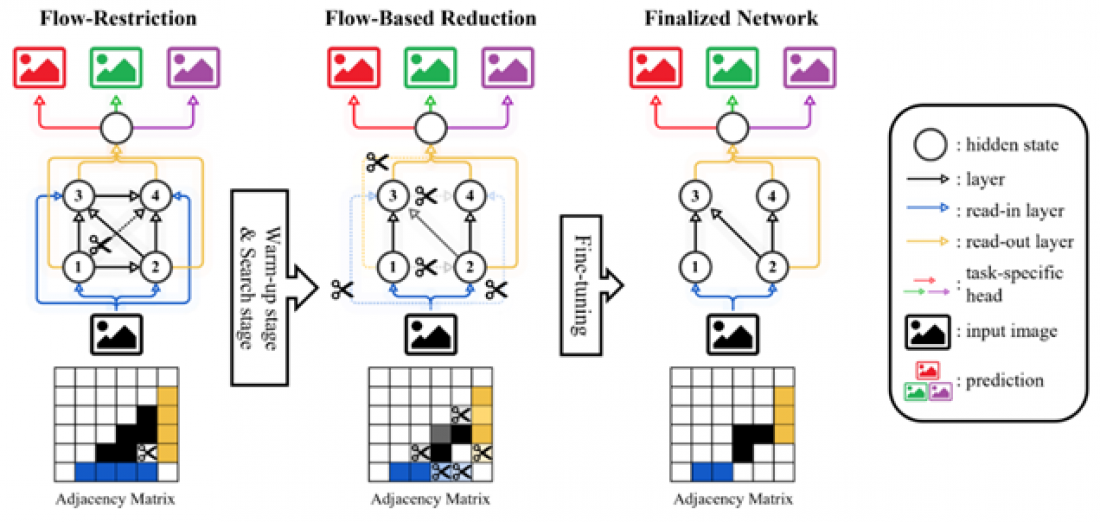

□ Professor Im's research team approached the problem from a different angle. Whereas previous studies searched for neural networks within a limited linear search space, the team proposed a method that expands this conventional linear search space, learning the relationships among the tasks while simultaneously finding a neural network optimized for each task.
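The release does not describe the search space in detail. As a rough, hypothetical illustration of why expanding a linear search space matters, the Python sketch below counts candidate per-task architectures under two simplified models, both of which are assumptions rather than the team's formulation: a "linear" space in which each layer of a fixed chain is either executed or skipped, and an expanded space in which each layer may read from any non-empty subset of the input and earlier layers, forming a directed acyclic graph (DAG). The function names are illustrative only.

```python
from math import prod


def linear_space_size(num_layers: int) -> int:
    """Per-task candidates in a simplified linear space:
    each layer of a fixed chain is either executed or skipped."""
    return 2 ** num_layers


def dag_space_size(num_layers: int) -> int:
    """Per-task candidates in a simplified DAG space:
    layer j (1..num_layers) may read from any non-empty subset of
    nodes 0..j-1, where node 0 is the network input, giving 2**j - 1 choices."""
    return prod(2 ** j - 1 for j in range(1, num_layers + 1))


if __name__ == "__main__":
    layers, tasks = 4, 3
    for name, size in (("linear", linear_space_size(layers)),
                       ("DAG", dag_space_size(layers))):
        # Each task picks its own architecture, so the joint space grows as size ** tasks.
        print(f"{name}: {size} per task, {size ** tasks} for {tasks} tasks")
```

With four layers, the linear model allows 16 candidates per task while the DAG model allows 315, and because each task chooses its own architecture, the gap widens further as tasks are added. The counts include some functionally duplicate graphs, so they indicate growth rather than exact sizes.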

□ The research team also proposed a technique that uses the computational resources of the searched neural network architecture efficiently while preserving as much performance as possible. By reducing the computation required, the method effectively improves an algorithm's execution speed, and it is expected to find broad practical use in AI fields that must perform multiple tasks at high speed, such as autonomous driving and robotics.
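The release does not say how the team reduces computation. One generic way to trade a little accuracy for speed, shown here purely as an illustration and not as the team's technique, is to prune the connections of a searched network under a compute budget. The sketch assumes each connection carries a hypothetical learned importance score and a compute cost, and greedily keeps the most important connections that still fit the budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    src: int
    dst: int
    importance: float  # hypothetical learned score: how much this connection helps accuracy
    cost: float        # hypothetical compute cost of the connection, e.g. in GFLOPs


def prune_to_budget(edges: list[Edge], budget: float) -> list[Edge]:
    """Greedily keep the most important edges whose combined cost fits within budget.

    Edges are visited in descending order of importance; an edge is kept only if
    adding its cost does not exceed the budget. Graph connectivity is not checked.
    """
    kept: list[Edge] = []
    spent = 0.0
    for edge in sorted(edges, key=lambda e: e.importance, reverse=True):
        if spent + edge.cost <= budget:
            kept.append(edge)
            spent += edge.cost
    return kept


if __name__ == "__main__":
    edges = [
        Edge(0, 1, importance=0.9, cost=2.0),
        Edge(0, 2, importance=0.2, cost=3.0),
        Edge(1, 2, importance=0.7, cost=2.0),
        Edge(1, 3, importance=0.1, cost=4.0),
        Edge(2, 3, importance=0.8, cost=2.0),
    ]
    kept = prune_to_budget(edges, budget=6.0)
    print([(e.src, e.dst) for e in kept])  # [(0, 1), (2, 3), (1, 2)]
    print(sum(e.cost for e in kept))       # 6.0
```

In the example, the three highest-scoring edges fit the budget of 6.0 exactly (out of a total cost of 13.0), and they happen to form the path 0 → 1 → 2 → 3. A greedy pass like this does not guarantee that the remaining graph stays connected, so a practical version would need to check that.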

□ Professor Sung-hoon Im of the Department of Electrical Engineering and Computer Science, DGIST, stated, “The neural network search technique developed in this research transcends the existing narrow framework of artificial intelligence and brings us a step closer to general-purpose artificial intelligence. If related technologies are further improved in the future, we expect it to be applied in many different fields and have a positive impact on advances in generalized artificial intelligence technology.”

□ Meanwhile, the research results were presented in June 2023 at the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), one of the most prestigious international conferences in computer vision.


[1] Neural network architecture discovery technology (commonly called neural architecture search, or NAS): A technique that automatically designs the architecture of a machine learning or deep learning model. It tries different candidate models, identifies the one that performs best, and produces an optimized model.
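As a toy illustration of the loop described in this footnote (try candidate models, score each one, keep the best), the Python sketch below exhaustively evaluates a tiny, hypothetical search space of layer depths and widths. The scoring function `toy_score` is an invented stand-in for training each candidate and measuring its validation accuracy. Practical neural architecture search usually replaces exhaustive enumeration with strategies such as reinforcement learning, evolutionary search, or gradient-based relaxation, because real search spaces are far too large to enumerate.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Architecture:
    depth: int  # number of layers
    width: int  # units per layer


# Hypothetical search space: every (depth, width) combination is one candidate model.
DEPTHS = (1, 2, 3)
WIDTHS = (16, 32, 64)


def toy_score(arch: Architecture) -> float:
    """Stand-in for 'train the model and measure validation accuracy'.

    Capacity raises the accuracy proxy with diminishing returns, while a linear
    penalty models the extra compute that larger networks cost.
    """
    capacity = arch.depth * arch.width
    return capacity / (capacity + 32) - 0.002 * capacity


def search(score) -> Architecture:
    """Try every candidate architecture and return the one with the highest score."""
    candidates = [Architecture(d, w) for d, w in product(DEPTHS, WIDTHS)]
    return max(candidates, key=score)


if __name__ == "__main__":
    best = search(toy_score)
    print(best)  # Architecture(depth=3, width=32)
```

Here the compute penalty makes the largest candidate (depth 3, width 64) lose to a mid-sized one: depth 3 with width 32 scores highest.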