□ A research team led by Professor Sang-hyun Park of the Department of Robotics and Mechanical Engineering/Artificial Intelligence at Daegu Gyeongbuk Institute of Science and Technology (DGIST; President Young Kuk), in collaboration with a research team from Stanford University in the US, has developed a federated learning AI technology that enables large-scale model training without sharing personal information or data. Because the technology trains models efficiently and can be used jointly by multiple institutions, it is expected to contribute significantly to medical image analysis.

□ In the medical field, training deep learning models has raised strong concerns about privacy violations because the data contain patients’ personal information. This has made it difficult to collect data from individual hospitals on a central server to develop a large-scale model that multiple hospitals could use jointly.

□ Federated learning addresses this problem: instead of gathering data on a central server, each hospital or institution trains a model on its own data and sends only the trained model to the central server, where the models are combined into a shared model. However, this process usually requires sending models to the central server many times. For hospitals that must store patient data securely, each repeated transfer costs substantial money and time, so the number of model transfers must be minimized.
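
□ The general federated learning loop described above can be sketched with FedAvg-style weighted averaging, a common baseline rather than the DGIST team's method. The toy linear model, the hypothetical function names (`local_train`, `fed_avg`, `run_federated`, `make_client`) and the learning-rate settings are illustrative assumptions. The sketch shows that only model parameters reach the server and that the number of uploads grows with the number of rounds times the number of institutions, which is why reducing the number of rounds matters.

```python
import random
from typing import List, Tuple

# Hypothetical toy setup: each institution fits y = w * x + b on private (x, y) pairs.
Data = List[Tuple[float, float]]  # stays on the institution's own servers
Model = Tuple[float, float]       # (w, b): the only thing sent to the central server


def local_train(model: Model, data: Data, lr: float = 0.1, epochs: int = 10) -> Model:
    """Gradient descent on mean squared error, run locally at one institution."""
    w, b = model
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b)


def fed_avg(models: List[Model], sizes: List[int]) -> Model:
    """Server step: average client parameters, weighted by each client's sample count."""
    total = sum(sizes)
    w = sum(m[0] * s for m, s in zip(models, sizes)) / total
    b = sum(m[1] * s for m, s in zip(models, sizes)) / total
    return (w, b)


def run_federated(clients: List[Data], rounds: int) -> Tuple[Model, int]:
    """Return the shared model and the total number of model uploads to the server."""
    global_model: Model = (0.0, 0.0)
    uploads = 0
    for _ in range(rounds):
        local_models = []
        for data in clients:
            local_models.append(local_train(global_model, data))
            uploads += 1  # one model (never raw data) sent per institution per round
        global_model = fed_avg(local_models, [len(d) for d in clients])
    return global_model, uploads


def make_client(n: int, rng: random.Random) -> Data:
    """Hypothetical private dataset drawn from y = 3x + 1 plus small noise."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0.0, 0.1)) for x in xs]


if __name__ == "__main__":
    rng = random.Random(0)
    hospitals = [make_client(50, rng) for _ in range(3)]
    model, uploads = run_federated(hospitals, rounds=20)
    print(f"w={model[0]:.2f}, b={model[1]:.2f}, uploads={uploads}")  # uploads = 3 * 20 = 60
```

In this baseline every round costs one upload per institution, so halving the number of rounds halves the transfer cost that the paragraph above identifies as the main burden.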

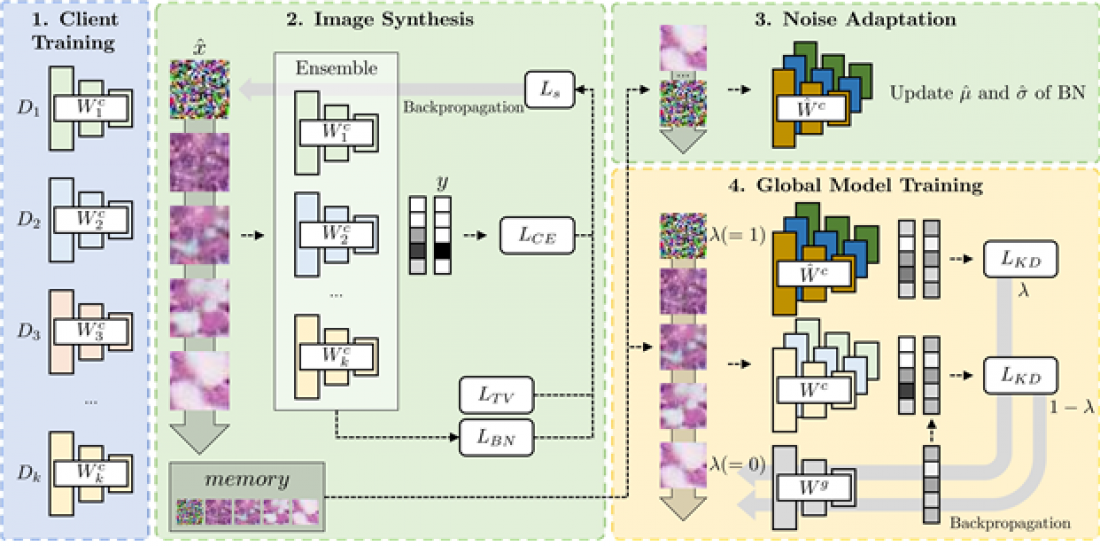

□ Professor Park’s research team developed a method that uses image generation and knowledge distillation[1] to minimize the number of model transfers while maintaining, and even improving, model performance. The method trains the model on the central server using the images and models generated by the participating institutions, making the training process more efficient.
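
□ To make the knowledge distillation step more concrete, the following sketch shows the standard temperature-scaled distillation loss, in which a student model on the server learns to match the averaged, softened predictions of several teacher models (for example, one per institution) on a single input image, such as a generated one. This is a generic textbook formulation, not the team's published algorithm; the function names, the temperature of 4.0, and treating the student's logits as directly trainable parameters are assumptions made for illustration.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() numerically stable."""
    scaled = [z / temperature for z in logits]
    top = max(scaled)
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def teacher_targets(teacher_logits: List[Sequence[float]], temperature: float) -> List[float]:
    """Average the softened class probabilities of several teachers (e.g. one per institution)."""
    probs = [softmax(logits, temperature) for logits in teacher_logits]
    return [sum(p[i] for p in probs) / len(probs) for i in range(len(probs[0]))]


def distillation_loss(student_logits: Sequence[float], targets: Sequence[float],
                      temperature: float = 4.0) -> float:
    """T^2 * KL(targets || softened student): the usual soft-target distillation loss."""
    p_s = softmax(student_logits, temperature)
    kl = sum(t * math.log(t / s) for t, s in zip(targets, p_s) if t > 0)
    return temperature ** 2 * kl


def distill(student_logits: Sequence[float], targets: Sequence[float],
            temperature: float = 4.0, lr: float = 0.5, steps: int = 200) -> List[float]:
    """Gradient descent on the student's logits; dLoss/dz = T * (p_student - targets)."""
    z = list(student_logits)
    for _ in range(steps):
        p_s = softmax(z, temperature)
        z = [zi - lr * temperature * (ps - t) for zi, ps, t in zip(z, p_s, targets)]
    return z


if __name__ == "__main__":
    # Hypothetical logits from three institution models for one generated image (3 classes).
    teachers = [[2.0, 0.5, -1.0], [1.5, 1.0, -0.5], [2.5, 0.0, -1.5]]
    targets = teacher_targets(teachers, temperature=4.0)
    student = [0.0, 0.0, 0.0]
    print("loss before:", distillation_loss(student, targets))
    student = distill(student, targets)
    print("loss after: ", distillation_loss(student, targets))
```

In a real system the student would be a full network whose weights are updated through this loss over many generated images; the toy version keeps only the loss and its gradient so the mechanism is visible.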

□ The research team applied this technology to classification tasks on microscopy, dermatoscopy, optical coherence tomography (OCT), pathology, X-ray, and fundus images. The results showed that it achieved higher classification performance than conventional federated learning techniques.

□ Professor Park of the Department of Robotics and Mechanical Engineering, DGIST, stated, “This research will allow models to be trained across all participating institutions without sharing personal information or data. This technology is expected to markedly reduce the cost of developing large-scale AI models in a variety of medical settings.”

□ This study was conducted in collaboration with a research team at Stanford University in the US. In further joint work with Stanford University, Professor Park’s research team also developed a conditional diffusion model that generates realistic 3D brain MRI images conditioned on 2D brain MRI slices given as inputs. Compared with conventional brain MRI generation models, it generates high-quality images using less memory, and it is expected to play an important role in the medical field.

□ This research was conducted through DGIST’s General Project and the National Research Foundation of Korea’s New Research Support Project. The results were recognized for their excellence and published in October 2023 at the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI), one of the top conferences in medical image analysis.


[1] Knowledge distillation: A technique for training a target (student) network using the outputs of a previously trained (teacher) network.