This story is featured in the Asia Research News 2022 magazine.

Technology that can reliably assess the accuracy of sign language promises a step change to help hearing people better communicate with the deaf community.

Employing artificial intelligence (AI)-based sign language recognition, a new game called SignTown teaches the use of signs for typical activities and, crucially, provides immediate feedback on player accuracy.

The game is a collaboration between The Chinese University of Hong Kong (CUHK), Google, The Nippon Foundation and Kwansei Gakuin University in Japan. It was launched on 23 September 2021, the International Day of Sign Languages.

Nearly one in every 1,000 babies worldwide is born with a hearing impairment. “There is a real need for hearing people who live and deal with this population to learn sign language and be able to communicate with them,” says Felix Sze of CUHK’s Centre for Sign Linguistics and Deaf Studies (CSLDS). “SignTown aims to bridge the gap between the two worlds.”

SignTown is the first step of Project Shuwa, which aims to significantly improve sign language recognition models. The research team believes that by teaching hearing people to sign, the online game will help the deaf community feel more included.

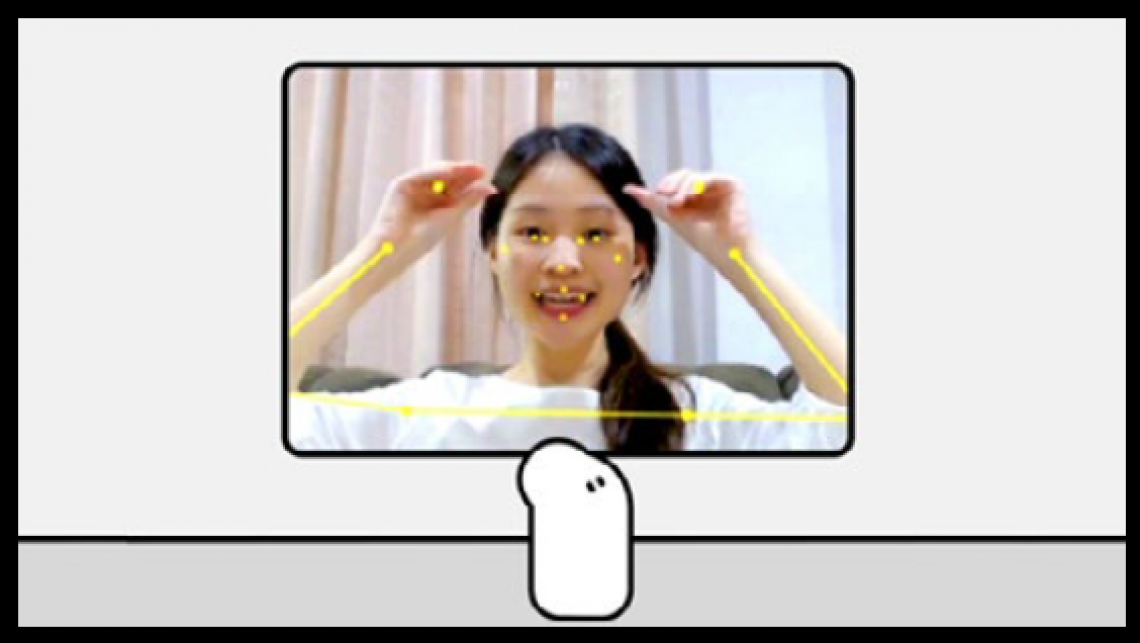

The game puts players in a virtual town where sign language is the official way to communicate. They use sign language in front of their computer cameras to complete tasks, such as packing for a trip, staying at a hotel, or ordering food in a café. The AI-powered recognition model provides immediate feedback on signing accuracy.

Previous sign language recognition models were not accurate enough because of weaknesses in the way they analysed visual-gestural language data. Sign language involves a range of gestural information, including hand, head and body movements, facial expressions and mouth shapes. Signing without these subtleties can lead to messages that are grammatically incorrect or that are difficult to interpret.

While sign languages vary from one country to another, phonetic features, including hand shapes, orientations and movements, are universal and the number of possible combinations is finite, making recognition models possible. Typically, complicated equipment, such as 3D cameras and gloves with sensors, has been needed to recognise signing in a 3D space.

Project Shuwa removed the need for such equipment by developing a machine learning model that can use a standard camera to recognise, track and analyse 3D hand and body movements, as well as facial expressions.

The game allows users to choose between Japanese and Hong Kong Sign Language, with more options to become available in the future.

The project next aims to generate a sign dictionary and an automatic translation model that uses computer and smartphone cameras to recognise sign language conversations and convert them into spoken language.

“There is still a long way to go, but SignTown is opening the door to many more opportunities for people around the world to learn about sign language in real life situations,” says CSLDS computer officer Cheng Ka Yiu, who was involved in the development of the game. “We are latching onto advanced technology to build an inclusive and harmonious society with equal opportunities.”

Further information:

Professor Felix Sze and Mr Cheng Ka Yiu

Centre for Sign Linguistics and Deaf Studies

Department of Linguistics and Modern Languages

The Chinese University of Hong Kong

We welcome you to reproduce articles in Asia Research News 2022 provided appropriate credit is given to Asia Research News and the research institutions featured.