□ Professor Sang-hyun Park at the Department of Robotics and Mechatronics Engineering, Daegu Gyeongbuk Institute of Science and Technology (DGIST; President Young Kuk), has developed a domain-adaptive artificial intelligence (AI) technique specialized for medical images. The technique keeps analysis accurate even when medical images are acquired with different scanners or settings. Conventionally, new training data must be built and labeled whenever image characteristics change, so the technique is expected to save considerable time and cost in the medical field.

□ In medical AI, performance often drops sharply when a model receives images that differ from those used in training. For instance, a model trained on MRI data acquired with Company B's scanner may perform worse on MRI data acquired with Company A's scanner. Further, when multimodality[1] images, such as computed tomography (CT) and magnetic resonance imaging (MRI), are difficult to obtain, a dataset can be generated by converting CT images into MRI images, enabling more accurate analysis. Recent studies have proposed methods for changing the style of images, but most focus on ordinary images and often introduce structural deformation during conversion. Medical images used for diagnosis, however, must show no structural deformation in organs, blood vessels, or lesions.

□ Accurate analysis of medical images therefore requires technology that prevents performance loss when the domain changes while minimizing structural deformation. To this end, Professor Park’s research team developed a technique that minimizes structural deformation during image conversion by using a mutual information loss function[2].
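□ Why mutual information suits this purpose can be illustrated with a small numerical sketch. The function below estimates the mutual information between two images from their joint intensity histogram. The function name, the bin count, and the synthetic test images are assumptions for illustration. This non-differentiable histogram estimate is not the formulation used in the study, which this release does not describe. An intensity change that leaves every pixel in place (a stand-in for a style change) keeps mutual information with the source high. Shifting the content (a stand-in for structural deformation) makes it drop sharply.

```python
import numpy as np

def mutual_information(a: np.ndarray, b: np.ndarray, bins: int = 32) -> float:
    """Histogram estimate of mutual information (in nats) between two same-shape images.

    Illustrative only: hypothetical helper, not the study's loss function.
    """
    # Joint histogram of co-located pixel intensities
    joint, _, _ = np.histogram2d(a.ravel(), b.ravel(), bins=bins)
    pxy = joint / joint.sum()              # joint probability p(x, y)
    px = pxy.sum(axis=1, keepdims=True)    # marginal p(x), shape (bins, 1)
    py = pxy.sum(axis=0, keepdims=True)    # marginal p(y), shape (1, bins)
    nz = pxy > 0                           # skip empty cells to avoid log(0)
    return float(np.sum(pxy[nz] * np.log(pxy[nz] / (px * py)[nz])))

# Synthetic stand-ins for a source image and two converted versions
rng = np.random.default_rng(0)
source = rng.random((64, 64))
restyled = source ** 2                               # new intensities, same structure
deformed = np.roll(source ** 2, shift=8, axis=1)     # same intensities, content shifted

mi_kept = mutual_information(source, restyled)
mi_broken = mutual_information(source, deformed)
print(f"structure kept: {mi_kept:.2f} nats, structure shifted: {mi_broken:.2f} nats")
```

A training loss would use the negative of a differentiable mutual information estimate, so that maximizing it discourages the generator from moving structures. The histogram version here only shows which changes the measure rewards and which it penalizes.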

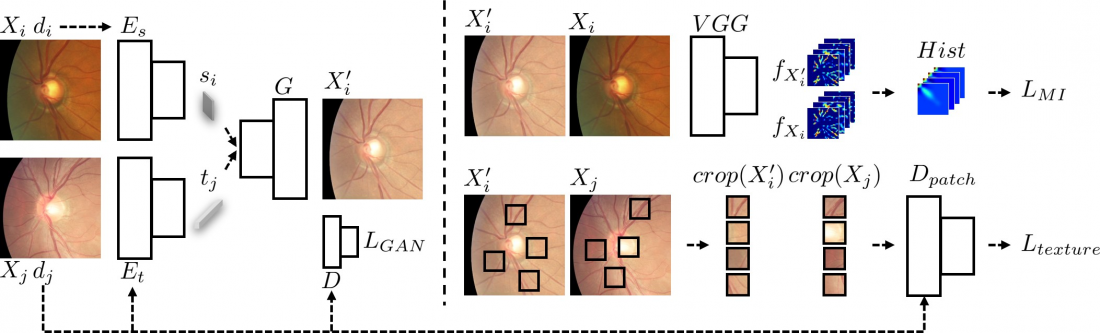

□ The technique extracts structural information from the source medical images and texture information from images in the new domain, and uses a discriminator loss function[3] to make the generated images realistic. To reduce structural deformation while preserving the texture of the new domain, it also uses a texture co-occurrence loss function[4] and the mutual information loss function. The technique can generate images in a new domain, and training a deep learning model on these generated images enables domain adaptation.
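□ Discriminator losses of the kind described in footnote [3] are typically computed as in generative adversarial networks (GANs). The NumPy sketch below shows a conventional discriminator loss and a non-saturating generator loss computed from raw discriminator scores (logits). It then combines the generator term with texture co-occurrence and mutual information terms as a weighted sum. The function names, the weights `lam_tex` and `lam_mi`, and the weighted-sum form are assumptions for illustration. The release does not specify how the study balances these terms.

```python
import numpy as np

def bce_with_logits(logits, target) -> float:
    """Numerically stable binary cross-entropy on raw discriminator scores."""
    x = np.asarray(logits, dtype=float)
    return float(np.mean(np.maximum(x, 0) - x * target + np.log1p(np.exp(-np.abs(x)))))

def discriminator_loss(real_logits, fake_logits) -> float:
    # The discriminator is rewarded for scoring real images as 1 and generated images as 0
    return bce_with_logits(real_logits, 1.0) + bce_with_logits(fake_logits, 0.0)

def generator_adv_loss(fake_logits) -> float:
    # Non-saturating form: the generator is rewarded when its images are scored as real
    return bce_with_logits(fake_logits, 1.0)

def total_generator_loss(fake_logits, texture_loss, mi, lam_tex=1.0, lam_mi=1.0) -> float:
    # Hypothetical weighted sum; mutual information is maximized, so it enters with a minus sign
    return generator_adv_loss(fake_logits) + lam_tex * texture_loss - lam_mi * mi

# Example: a discriminator that already separates real from generated images well
real = np.array([3.0, 2.5, 4.0])
fake = np.array([-2.0, -3.0, -1.5])
d_loss = discriminator_loss(real, fake)
g_loss = total_generator_loss(fake, texture_loss=0.4, mi=1.2, lam_tex=1.0, lam_mi=0.5)
print(f"discriminator loss: {d_loss:.3f}, generator loss: {g_loss:.3f}")
```

In this example the discriminator loss is small and the generator loss is large, which is the signal that pushes the generator toward more realistic images. Raising `lam_mi` would trade some realism for stronger structure preservation.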

□ Professor Park’s research team applied the technique to domain adaptation on fundus[5] images, prostate MRI, and cardiac CT and MRI collected from multiple centers, converting images into the opposite modality. The results confirmed that the technique generates images in a different modality while preserving structure, and that it outperforms conventional domain adaptation and image conversion techniques.

□ Professor Sang-hyun Park at the Department of Robotics and Mechatronics Engineering, DGIST, said, “In this study, we have successfully developed technology that can significantly save the time and cost required to train a new artificial intelligence model whenever the domain changes in the medical field. This technology is expected to greatly contribute to the development of diagnostic software applicable across various healthcare sites.”

□ This study was funded by the Ministry of Trade, Industry and Energy's Field Demand-Based Medical Device Advanced Technology Development Project and the National Research Foundation of Korea's New Researcher Support Project. Its results were recognized for their excellence and published in Pattern Recognition, a leading journal in image analysis.


[1] Multimodality: Multiple types of information or data, such as images from different imaging methods (e.g., CT and MRI).

[2] Mutual information loss function: A loss function that is sensitive to even small structural deformations, which makes it useful for image conversion that avoids such deformations.

[3] Discriminator loss function: A loss function that helps generate realistic images. It computes the loss using a discriminator that distinguishes real images from generated ones.

[4] Texture co-occurrence loss function: A loss function that makes generated images take on a target texture. It does so by reducing the difference between regions cropped from the generated image and regions cropped from an image with the target texture.

[5] Fundus: The back of the eyeball.