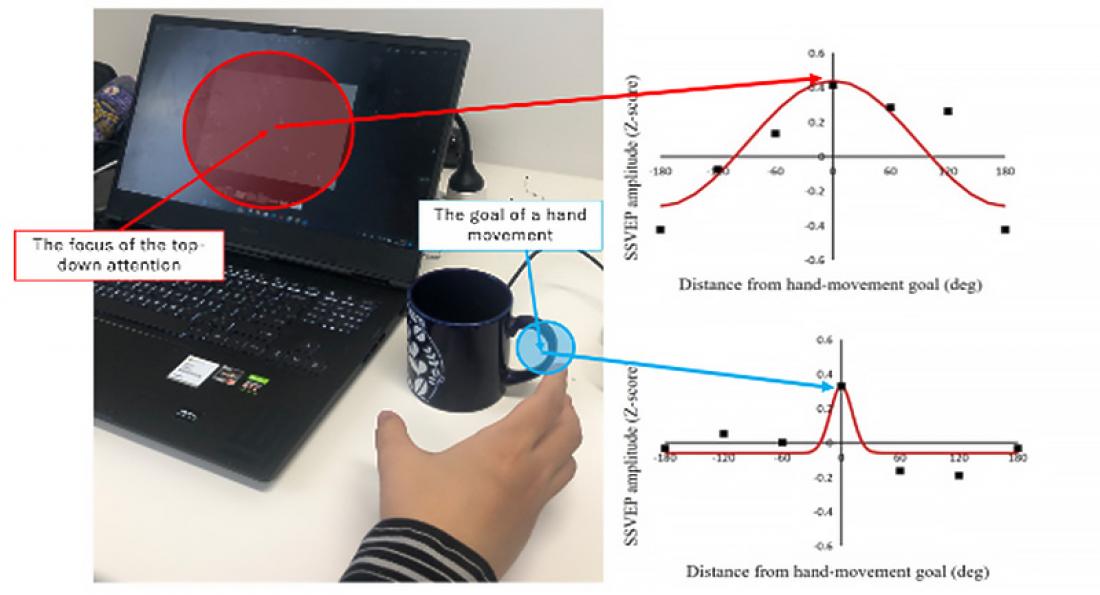

People can perform tasks simultaneously, directing their attention to different locations for different tasks. For example, when you reach for a coffee mug while working on a PC, part of your attention may go to the cup while the rest stays on the display. Attention to the cup is tied to the hand movement and may differ from top-down attention to the display. The study's results showed that the two types of attention have different spatial profiles: attention to the hand-movement goal (bottom right) has a much narrower spatial extent than top-down attention (top right). This suggests there is an attention mechanism that moves to the location where the hand intends to go, independent of top-down attention.

Our hands do more than just hold objects. They also facilitate the processing of visual stimuli. When you move your hands, your brain first perceives and interprets sensory information, then selects the appropriate motor plan before initiating and executing the desired movement. How successfully that task is carried out depends on many factors, such as how easy the task is, whether distracting external stimuli are present, and how many times the person has performed it before.

Take, for example, a baseball outfielder catching a ball. The outfielder wants to make sure that when the ball heads their way, it ends up in their glove (the hand-movement goal). Once the batter hits the ball and it flies towards the outfielder, the outfielder visually tracks it and selects the best course of action (hand-movement preparation). They then anticipate where to position their hand and body relative to the ball to make the catch (future hand location).

Researchers have long pondered whether the hand-movement goal influences endogenous attention. Sometimes referred to as top-down attention, endogenous attention acts like our own personal spotlight: we choose where to shine it. We use it when searching for an object, trying to block out distractions whilst working, or holding a conversation in a noisy environment. Elucidating the mechanisms behind hand movements and attention may help develop AI systems that support the learning of complicated movements and manipulations.

Now, a team of researchers at Tohoku University has found that attention to the hand-movement goal operates independently of endogenous attention.

"We conducted two experiments to determine whether hand-movement preparation shifts endogenous attention to the hand-movement goal, or whether it is a separate process that facilitates visual processing," said Satoshi Shioiri, a researcher at Tohoku University's Research Institute of Electrical Communication (RIEC) and a co-author of the paper.

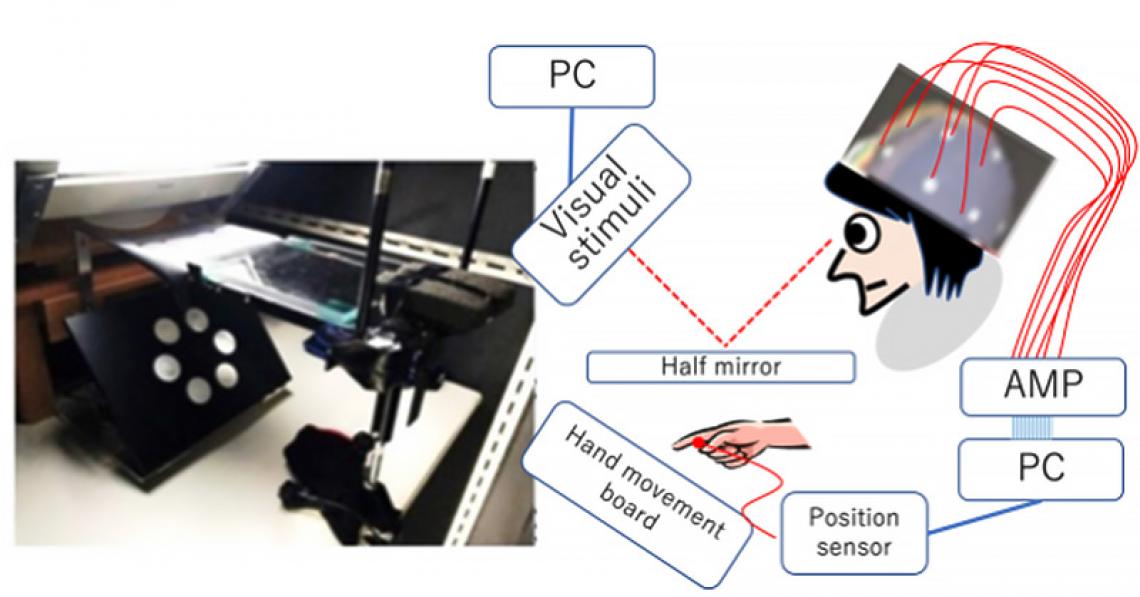

In the first experiment, the researchers separated attention to the hand-movement goal from top-down visual attention by cueing participants to move their hand either to the same location as a visual target or to a different location. Each case had a control condition in which participants were not asked to move their hand.

Visual stimuli were presented via a half mirror so that participants could not see their hands during the experiment. EEG signals and hand movements were recorded for later analysis.

The second experiment examined whether the order in which the hand-movement goal and the visual target were cued affected visual performance.

Shioiri and his team used electroencephalography (EEG) to measure the brain activity of participants, focusing on steady-state visual evoked potentials (SSVEPs). When a person views a visual stimulus that flickers at a steady rate, such as a flashing light or a moving pattern, their brain produces rhythmic electrical activity at the same frequency. This frequency-locked change in the EEG signal is the SSVEP, and it helps researchers assess the extent to which the brain selectively attends to or processes visual information at different locations, i.e., the spatial window of attention.
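To make the SSVEP idea more concrete, here is a minimal Python sketch of "frequency tagging", a common way SSVEPs are used to track attention: each stimulus flickers at its own rate, and the EEG amplitude at that rate serves as a readout of how strongly the stimulus is being processed. This is a hypothetical illustration, not the study's analysis. The 250 Hz sampling rate, the 12 Hz and 15 Hz flicker rates, the amplitudes (a larger one standing in for the attended stimulus), and the helper names `simulate_eeg` and `tone_amplitude` are all assumptions, and the "EEG" is just synthetic sinusoids plus Gaussian noise.

```python
import math
import random


def simulate_eeg(fs, seconds, components, noise_sd, seed=0):
    """Hypothetical EEG trace sampled at `fs` Hz: one sinusoid per
    (frequency_hz, amplitude) pair in `components`, plus Gaussian noise."""
    rng = random.Random(seed)
    n = int(fs * seconds)
    trace = []
    for i in range(n):
        t = i / fs
        value = sum(a * math.sin(2 * math.pi * f * t) for f, a in components)
        trace.append(value + rng.gauss(0.0, noise_sd))
    return trace


def tone_amplitude(signal, fs, freq):
    """Amplitude of the `freq` Hz component of `signal`, computed with a
    single-bin discrete Fourier transform."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    # Scale by 2/n so a pure sinusoid of amplitude A that completes a whole
    # number of cycles in the window returns A.
    return 2 * math.hypot(re, im) / n


if __name__ == "__main__":
    fs = 250
    # Assumed scenario: the "attended" stimulus flickers at 12 Hz and evokes
    # a larger response than the "unattended" one flickering at 15 Hz.
    eeg = simulate_eeg(fs, 4.0, [(12.0, 2.0), (15.0, 1.0)], noise_sd=1.0)
    for hz in (12.0, 15.0):
        print(f"{hz:.0f} Hz tag: amplitude {tone_amplitude(eeg, fs, hz):.2f}")
```

Because each flicker rate completes a whole number of cycles in the 4-second window, the two tagged components do not leak into each other's frequency bin, so the recovered amplitudes stay close to the simulated values despite the noise. Real EEG pipelines add steps this sketch leaves out, such as filtering, artifact rejection, and averaging across trials and electrodes.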

"Based on the experiments, we concluded that when top-down attention is oriented to a location far from the future hand location, the visual processing of future hand location still occurs. We also found that this process has a much narrower spatial window than top-down attention, suggesting that the processes are separate," adds Shioiri.

The research group hopes the findings can be applied to develop systems that help maintain appropriate attention states in different situations.

Details of the research were published in the Journal of Cognitive Neuroscience on May 8, 2023.