Researchers investigate the use of generative AI in academic research collection: accuracy and efficiency levels differ depending on the AI used

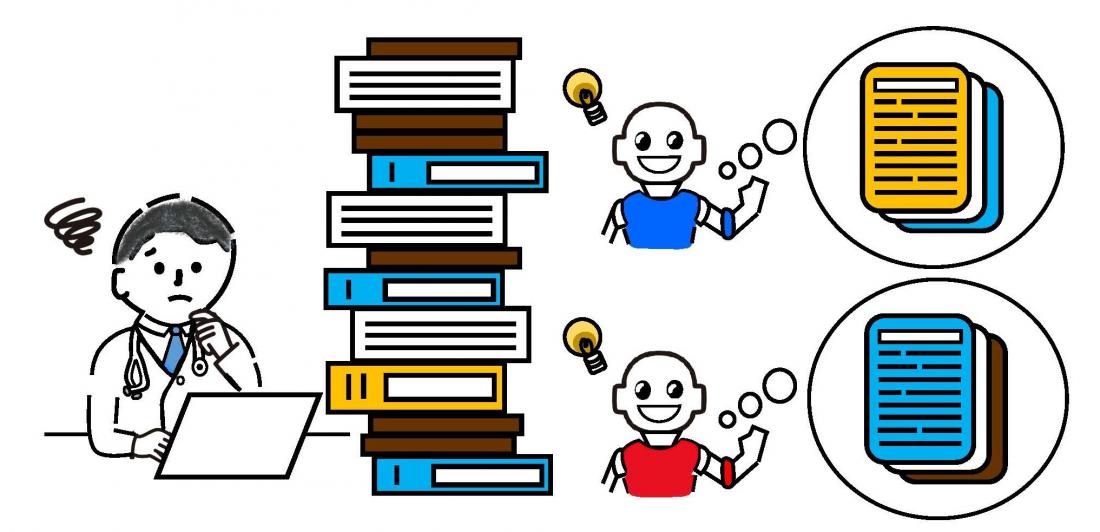

Can AI save us from the arduous and time-consuming task of academic research collection? An international team of researchers investigated the credibility and efficiency of generative AI as an information-gathering tool in the medical field.

The research team, led by Professor Masaru Enomoto of the Graduate School of Medicine at Osaka Metropolitan University, fed identical clinical questions and literature selection criteria to two generative AIs: ChatGPT and Elicit. The results showed that while ChatGPT suggested fictitious articles, Elicit was efficient, suggesting multiple references within a few minutes with the same level of accuracy as the researchers.

“This research was conceived out of our experience with managing vast amounts of medical literature over long periods of time. Access to information using generative AI is still in its infancy, so we need to exercise caution as the current information is not accurate or up-to-date,” said Dr. Enomoto. “However, ChatGPT and other generative AIs are constantly evolving and are expected to revolutionize the field of medical research in the future.”

Their findings were published in Hepatology Communications.

Conflicts of interest

Cheng-Hao Tseng is on the speakers’ bureau for Roche. Yao-Chun Hsu consults, advises, is on the speakers’ bureau, and received grants from Gilead. He is on the speakers’ bureau and received grants from AbbVie, Bristol-Myers Squibb, and Roche. He advises Sysmex and is on the speakers’ bureau for MSD. Mindie Nguyen consults and received grants from Gilead, GlaxoSmithKline, and Exact Science. She consults for Intercept and Exelixis. She received grants from Pfizer, Enanta, AstraZeneca, Delfi, Innogen, Curve Bio, Vir, Healio, NCI, and Glycotest. The remaining authors have no conflicts to report.

###

About OMU

Osaka Metropolitan University is the third-largest public university in Japan, formed by a merger between Osaka City University and Osaka Prefecture University in 2022. OMU upholds "Convergence of Knowledge" through 11 undergraduate schools, a college, and 15 graduate schools. For more research news, visit https://www.omu.ac.jp/en/ or follow us on Twitter (@OsakaMetUniv_en) or Facebook.